Goodies from the Facebook Safety and Security Team

NEW TOOLS TO MANAGE FAKE NEWS ONLINE

On 26th May 2021, Facebook launched new ways to:

- inform people if they’re interacting with content that’s been rated by a fact-checker, and

- take stronger action against people who repeatedly share misinformation on Facebook.

Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections or other topics, FB is making sure fewer people see misinformation on its apps. This is being accomplished through:

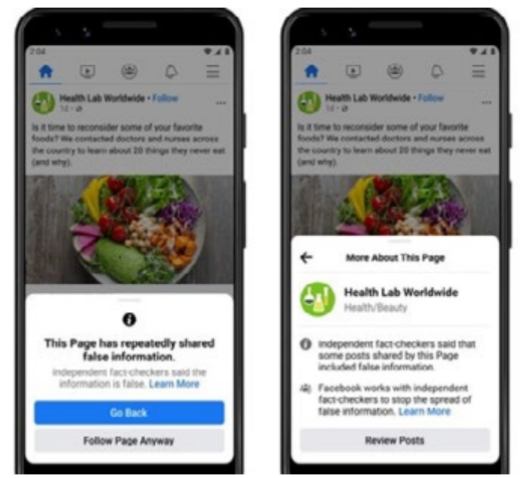

- More Context For Pages That Repeatedly Share False Claims

FB is now giving people more information before they like a Page that has repeatedly shared content that fact-checkers have rated. You will see a pop-up if you go to like one of these Pages.

You can also click to learn more: the pop-up explains that fact-checkers said some posts shared by this Page include false information, and it links to more information about FB’s fact-checking program. This will help people make an informed decision about whether they want to follow the Page.

- Expanding Penalties For Individual Facebook Accounts

Since launching its fact-checking program in late 2016, FB’s focus has been on reducing viral misinformation. FB has taken stronger action against Pages, Groups, Instagram accounts and domains sharing misinformation, and some of these efforts have now been expanded to include penalties for individual Facebook accounts too.

Starting 26th May, FB will reduce the distribution of all posts in News Feed from an individual’s Facebook account if they repeatedly share content that has been rated by one of FB’s fact-checking partners. FB already reduces a single post’s reach in News Feed if its content has been debunked.

- Redesigned Notifications When People Share Fact-Checked Content

FB currently notifies people when they share content that a fact-checker later rates, and these notifications have now been redesigned to make it easier to understand when this happens. The notification includes the fact-checker’s article debunking the claim as well as a prompt to share the article with their followers.

It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them.

REMAINING SOBER ONLINE – TAKING APART INFLUENCE OPERATIONS

Over the past four years, industry, government and civil society have worked to build a collective response to influence operations (“IO”), which are defined as “coordinated efforts to manipulate or corrupt public debate for a strategic goal.”

The security teams at Facebook (FB) have developed policies, automated detection tools, and enforcement frameworks to tackle deceptive actors — both foreign and domestic. Working with industry peers, Facebook has made progress against IO by making it less effective and by disrupting more campaigns early, before they could build an audience. These efforts have pressed threat actors to shift their tactics. They have — often without success — moved away from the major platforms and increased their operational security to stay under the radar.

Key Threat Trends

Some of the key trends and tactics observed by FB include:

- A shift from “wholesale” to “retail” IO: Threat actors pivot from widespread, noisy deceptive campaigns to smaller, more targeted operations.

- Blurring of the lines between authentic public debate and manipulation: Both foreign and domestic campaigns attempt to mimic authentic voices and co-opt real people into amplifying their operations.

- Perception Hacking: Threat actors seek to capitalize on the public’s fear of IO to create the false perception of widespread manipulation of electoral systems, even if there is no evidence.

- IO as a service: Commercial actors offer their services to run influence operations both domestically and internationally, providing deniability to their customers and making IO available to a wider range of threat actors.

- Increased operational security: Sophisticated IO actors have significantly improved their ability to hide their identity, using technical obfuscation and both witting and unwitting proxies.

The State of Influence Operations 2017-2020

Platform diversification: To evade detection and diversify risks, operations target multiple platforms (including smaller services) and the media, and rely on their own websites to carry on the campaign even when other parts of that campaign are shut down by any one company.

Mitigations

Influence operations target multiple platforms, and there are specific steps that the defender community, including platforms like FB, can take to make IO less effective, easier to detect, and more costly for adversaries. Mitigation actions include:

- Combine automated detection and expert investigations: Because expert investigations are hard to scale, it’s important to combine them with automated detection systems that catch known inauthentic behaviors and threat actors. This in turn allows investigators to focus on the most sophisticated adversaries and emerging risks coming from yet unknown actors.

- Adversarial design: In addition to stopping specific operations, platforms should keep improving their defenses to make the tactics that threat actors rely on less effective, for example by improving automated detection of fake accounts. As part of this effort, FB incorporates lessons from its CIB disruptions back into its products, and runs red team exercises to better understand the evolution of the threat and prepare for highly targeted civic events like elections.

- Whole-of-society response: We know that influence operations are rarely confined to one medium. While each service only has visibility into activity on its own platform, all of us — including independent researchers, law enforcement and journalists — can connect the dots to better counter IO.

- Build deterrence: One area where a whole-of-society approach is particularly impactful is in imposing costs on threat actors to deter adversarial behavior. For example, FB aims to use public transparency and predictability in enforcement to signal that it will expose the people behind IO when it finds operations on any of its platforms, and may ban them entirely. While platforms can take action within their boundaries, both societal norms and regulation against IO and deception, including when done by authentic voices, are critical to deterring abuse and protecting public debate.

KEEPING ATHLETES SAFE ONLINE THROUGH THE OLYMPICS SEASON

Athletes will take center stage this summer during the UEFA European Championship, and the Olympic and Paralympic Games. Millions of people across the world will use Facebook platforms (Facebook, Instagram, WhatsApp, etc.) to celebrate the athletes, countries and moments that make these events so iconic.

However, platforms like Facebook and Instagram mirror society. Everything that is good, bad and ugly in our world will find expression on our apps. So while we expect to see tons of positive engagement, we also know there might be those who misuse these platforms to abuse, harass and attack athletes competing this summer.

Facebook has rolled out new measures ahead of the Olympics to provide further support for athletes and protection from online bullying and harassment. These measures include:

Policies on Hate Speech, Abuse and Harassment

Hate speech is not allowed on FB apps. Specifically, FB does not tolerate attacks on people based on their protected characteristics, including race or religion, as well as more implicit forms of hate speech. FB removes hate speech when it becomes aware of it.

FB is investing heavily in people and technology to find and remove this content faster. FB has tripled the number of people working on safety and security at Facebook, to more than 35,000, and is a pioneer in artificial intelligence technology that removes hateful content at scale. Between January and March of 2021, FB took action on 25.2 million pieces of hate speech on Facebook, including in DMs, 96% of which FB found before anyone reported it. And on Instagram, during the same period, FB took action on 6.3 million pieces of hate speech content, 93% of it before anyone reported it.

FB has also introduced stricter penalties for people who send abusive DMs on Instagram. When someone sends DMs that break FB’s rules, FB prohibits that person from sending any more messages for a set period of time. If someone continues to send violating messages, FB will disable their account. FB will also disable new accounts created to get around its messaging restrictions, and will continue to disable accounts that are created purely to send abusive messages.
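The escalation described above (a temporary messaging restriction first, then a disabled account for continued violations) can be pictured with a small sketch. This is only an illustration, not FB's actual system: the `Account` class, the function names, the seven-day restriction period and the two-violation threshold are all assumptions, since the source only says "a set period of time" and "continues to send violating messages."

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Both values are assumptions for illustration; the source gives no numbers.
RESTRICTION_PERIOD = timedelta(days=7)
MAX_VIOLATIONS = 2


@dataclass
class Account:
    """Hypothetical record of one account's DM enforcement state."""
    violations: int = 0
    restricted_until: Optional[datetime] = None
    disabled: bool = False


def record_violation(account: Account, now: datetime) -> None:
    """Apply the next penalty step after a rule-breaking DM."""
    account.violations += 1
    if account.violations >= MAX_VIOLATIONS:
        # Continued violations: the account is disabled.
        account.disabled = True
    else:
        # First violation: messaging is blocked for a set period.
        account.restricted_until = now + RESTRICTION_PERIOD


def can_send_dm(account: Account, now: datetime) -> bool:
    """Return True if the account is currently allowed to send DMs."""
    if account.disabled:
        return False
    return account.restricted_until is None or now >= account.restricted_until
```

With these assumed values, a first violation blocks messaging for a week, and a second violation disables the account regardless of when it happens.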

Tools to Protect Athletes

- Dedicated Instagram Account for Athletes

FB is launching a dedicated Instagram account for athletes competing in Tokyo this summer. This private account will not only share Facebook and Instagram best practices, but also serve as a channel for athletes to send questions and flag issues to the FB safety and security team.

- Instagram Direct Message Controls

Because DMs are private conversations, FB does not proactively look for content like hate speech or bullying the same way it does elsewhere on Instagram. That’s why last year FB rolled out reachability controls, which allow people to limit who can send them a DM, such as only people who follow them.

For those who still want to interact with fans, but don’t want to see abuse, FB has announced a new feature called “Hidden Words.” When turned on, this tool will automatically filter DM requests containing offensive words, phrases and emojis, so athletes never have to see them.

FB has worked with leading anti-discrimination and anti-bullying organizations to develop a predefined list of offensive terms that will be filtered from DM requests when the feature is turned on. We know different words can be hurtful to different people, so athletes will also have the option to create their own custom list of words, phrases or emojis that they don’t want to see in their DM requests. All DM requests that contain these offensive words, phrases, or emojis — whether from a custom list or the predefined list — will be automatically filtered into a separate hidden requests folder.
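The routing logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Instagram's implementation: the class name `HiddenWordsFilter`, the folder labels, and the placeholder terms are all hypothetical, and the real predefined list is not given in the source. Plain case-insensitive substring matching is used here for simplicity; a production filter would need smarter matching to avoid flagging harmless words that happen to contain a listed term.

```python
from dataclasses import dataclass, field

# Placeholder entries only; the actual predefined list is not published here.
PREDEFINED_TERMS = frozenset({"placeholder_slur", "placeholder_insult"})


@dataclass
class HiddenWordsFilter:
    """Hypothetical model of the Hidden Words setting for one account."""
    enabled: bool = True
    custom_terms: set = field(default_factory=set)

    def blocked_terms(self) -> set:
        # Combine the predefined list with the athlete's own custom list.
        return set(PREDEFINED_TERMS) | {t.lower() for t in self.custom_terms}

    def is_offensive(self, message: str) -> bool:
        # Case-insensitive substring match covers words, phrases and emojis.
        text = message.lower()
        return any(term in text for term in self.blocked_terms())

    def route(self, message: str) -> str:
        """Return the folder a DM request would land in."""
        if self.enabled and self.is_offensive(message):
            return "hidden_requests"
        return "requests"
```

For example, an athlete who adds a word and an emoji to the custom list would have any DM request containing either one sent to the hidden folder, while other requests arrive as usual. With the feature turned off, nothing is filtered.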

- Instagram and Facebook Comment Controls

Instagram and Facebook “Comment Controls” let athletes control who can comment on their posts. Athletes also have options in their privacy settings to automatically hide offensive comments on Facebook and Instagram. With that on, FB uses technology to proactively filter out words and phrases that are commonly reported for harassment. Athletes can also add their own list of words, phrases or emojis, so they never have to see them in comments again.

- Blocking

Athletes can block people on both Instagram and Facebook from seeing their posts or sending them messages. FB is also making it harder for someone already blocked on Instagram to contact an athlete again through a new account. With this feature, whenever athletes decide to block someone on Instagram, they’ll have the option to both block their account and preemptively block new accounts that person may create.

- Reminding Athletes of FB Safety Tools

FB will remind athletes competing in these events this summer of these tools via an Instagram notification that will be sent to the top of their Feeds. This notification will make it easier for athletes to learn how to adopt and use a number of FB’s safety features, like two-factor authentication and comment and messaging controls.

FB is committed to doing everything it can to fight hate and discrimination on its platforms, but it also knows these problems are bigger than FB.

FB looks forward to working with other companies, sports organizations, NGOs, governments and educators to help keep athletes safe.